University of Maryland researchers are presenting a study next week that measures machine learning interpretability—that is, how well computers can explain what they are thinking to users.

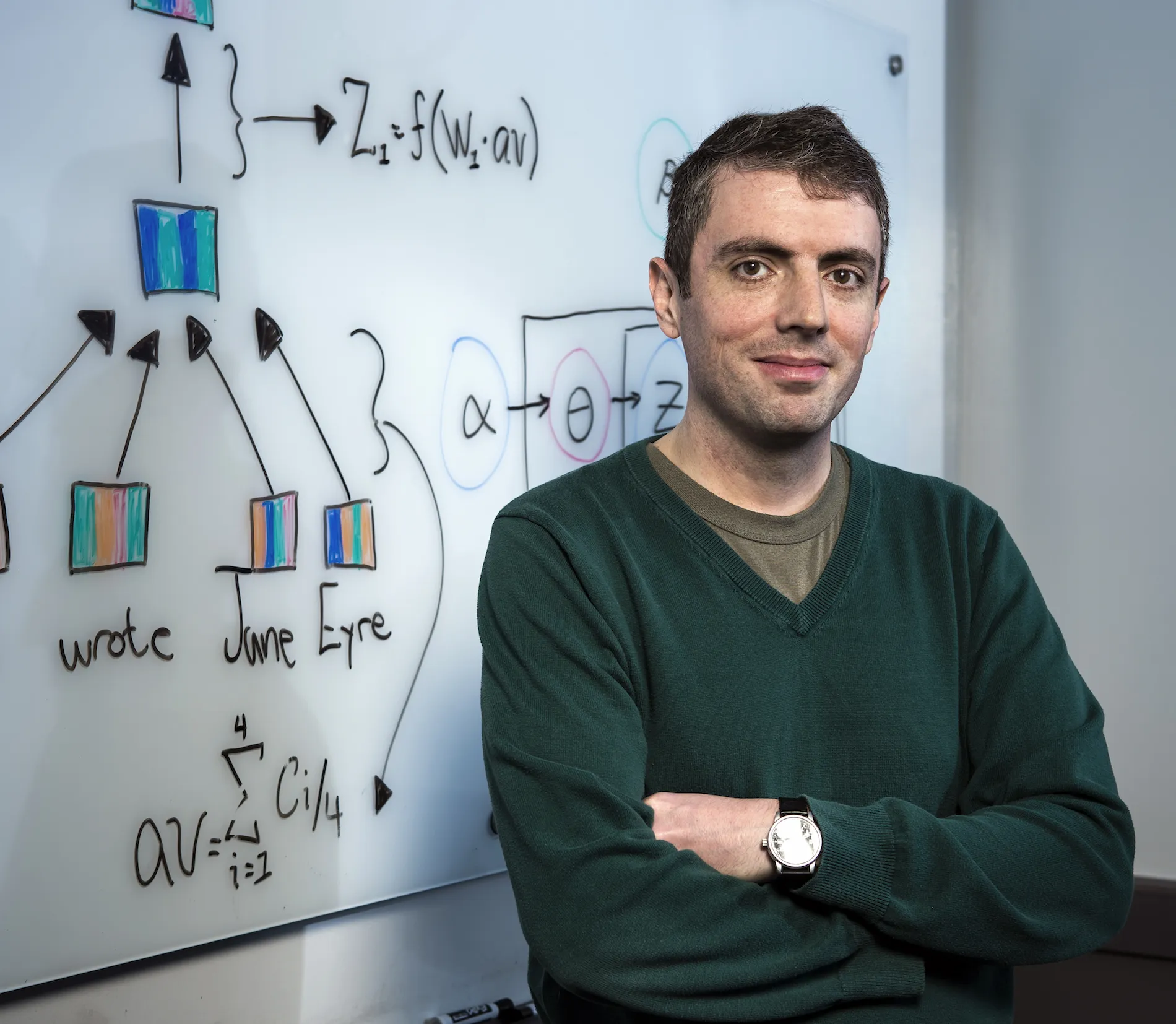

The study—by Jordan Boyd-Graber (in photo), an associate professor of computer science with appointments in the University of Maryland Institute for Advanced Computer Studies, the iSchool, and the Language Science Center, and Shi Feng, a third-year doctoral student in computer science—is based upon how well humans and computers play as a team.

The paper, “What can AI do for me?: Evaluating Machine Learning Interpretations in Cooperative Play,” evaluates the interpretability and utility of machine learning to maximize its effectiveness in real-life decision-making scenarios.

“AI systems are appearing in more and more places in our lives: they tell us what to binge watch next on Netflix and how to reply to e-mails,” says Boyd-Graber, who is Feng’s academic adviser. “How do we know if these systems are any good for the ways that we actually want AI to be used?”

To answer this question, the researchers created an evaluation of AI systems and their explanations based on how well a team consisting of a computer and a human plays a game together. They paired volunteer subjects, both trivia experts and novices, with a computer to play a trivia question-answering game.

The researchers discovered that novices, who are less familiar with the task, often trust computer algorithms too much, and that existing methods of interpretation focus only on what the machine predicts, not on whether the machine should be trusted. And while experts are fooled less easily, the researchers say experts want to see more in-depth explanations of what the machine is thinking so that they can vet the machine’s predictions.

Boyd-Graber and Feng will present their findings at the ACM Intelligent User Interfaces (ACM IUI) 2019 conference, to be held March 16–20 in Los Angeles. The annual ACM IUI meeting is recognized as a premier international forum for reporting outstanding research and development on intelligent user interfaces.